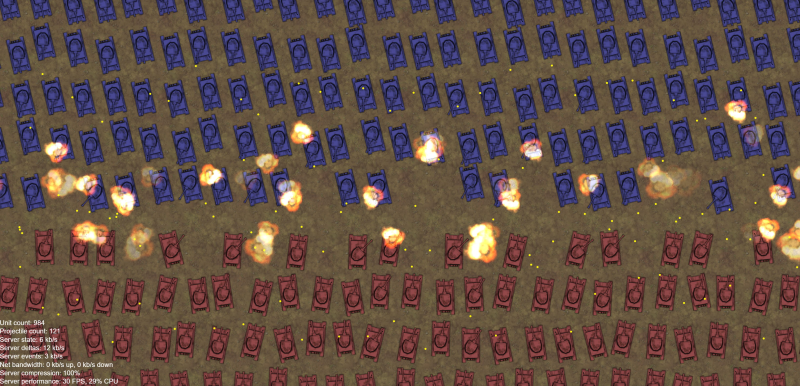

After the last devlog I had the basic controls for scrolling and zooming a larger level. Mostly for a laugh, I threw in 1000 units and set up a huge battle. That's where things start to get interesting.

Performance

First of all I assumed performance would pretty much grind to a halt at this point, as I had written simple, inefficient algorithms to get things off the ground quickly.

Instead it kept running perfectly smoothly.

It's using more CPU than it needs to, but the framerate was fine, both on client and server. Even though I've previously blogged about how fast JavaScript is, this still surprised me! For units to find targets they currently just naively check every single unit in the game every server tick. With 1000 units that means it does about 1,000,000 iterations per tick to find targets. JavaScript is so ridiculously fast it blitzes through that in about 10ms. Projectile collisions use a similar brute force approach and take less time than that even in intense combat.
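
To make the cost concrete, here is a minimal sketch of that kind of brute-force search. The unit fields (`id`, `player`, `x`, `y`, `range`) and the name `findTargets` are assumptions made up for illustration, not the game's actual code.

```javascript
// Hypothetical unit shape: { id, player, x, y, range }. Not the game's real data model.
function findTargets(units) {
  const targets = new Map(); // unit id -> id of the nearest enemy within range
  for (const unit of units) {
    let best = null;
    let bestDistSq = unit.range * unit.range;
    // Naively check every other unit in the game.
    for (const other of units) {
      if (other.player === unit.player) continue; // skips self and allies
      const dx = other.x - unit.x;
      const dy = other.y - unit.y;
      const distSq = dx * dx + dy * dy;
      if (distSq <= bestDistSq) {
        best = other;
        bestDistSq = distSq;
      }
    }
    if (best) targets.set(unit.id, best.id);
  }
  return targets;
}
```

The inner loop body runs `units.length * units.length` times, which is where the figure of about 1,000,000 iterations per tick for 1000 units comes from.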

Pretty awesome.

I had assumed I'd need to do some intensive optimisation at this point, but... it's kind of fine for now. I will do that later, but instead I'll move on to tackling a trickier problem: bandwidth management.

Bandwidth for 1000 units

First of all, I wanted to set a bandwidth goal: what's the most data the game should be sending, even with loads of action going on? I wasn't very sure about this, as it's hard to know what most Internet connections in the world can manage. I decided to settle on always coming under 100KiB/s as the goal, as that is approximately what an SD 480p (aka potato quality) video stream uses, and it seems reasonable to expect all modern devices and connections to be able to handle that.

Researching existing RTS games

I did some reading up on how other RTS games manage their bandwidth. I read several interesting blog posts about the networking design of games like Age of Empires and StarCraft.

These older games use a fairly unusual design. Especially in the 90s, there simply was not the bandwidth available to send lots of game data. Players would commonly have dial-up modems which you'd be lucky to get 5KiB/s with. There was no hope of streaming data for several hundred units. So they used a system where all players' games advance in lockstep, with completely deterministic gameplay, and only player inputs are transmitted over the network.

I've been programming a long time, and I think I have a fairly good sense of what various algorithms and technologies involve, and to me this sounds like... a nightmare! Indeed the blogs were full of tales of entire teams of developers struggling for weeks to fix obscure de-sync bugs that only happened after an hour of gameplay. I don't want to get mired in such difficult problems for a part-time project like this, and I'm willing to sacrifice some game capacity or simulation quality if necessary to avoid that. I'm going to use some form of streaming all the unit data, just because it's so, so much simpler. It also has advantages like allowing late-joining players, including as spectators (whereas lockstep-style requires everyone to be there at the start and to follow every single update).

It seemed all the modern RTS games I could find information on still used this architecture, even years later. So I also wondered: has that ended up just being "the way it's done" without anyone reconsidering? I mean, we all have broadband now, and even just 100KiB/s is 20x what you could get out of a dial-up modem back in the day. So maybe it's time someone had a go at doing it differently! I don't know if I'd really be the first person to do a large-scale RTS this way, but if I was that would be kinda cool.

Sending compact data

So as a baseline, I started with sending all data for all units every server tick. However to keep the messages as small as possible, it sends the data in a binary format (using DataView to read and write binary data types).

In JavaScript most numbers are double-precision floats (aka float64), which take 8 bytes. They're incredibly precise and have a huge range, but 8 bytes is pretty wasteful for many values. I was able to use unsigned 16-bit (aka uint16) numbers for most values, which have a range of 0-65535 and only take 2 bytes. This includes:

- Unit IDs (so you can only have up to 65535 units... but that's a lot)

- Positions (so you can only have a level up to 65535x65535... but that's pretty big)

- Speeds (values in pixels per second need to go beyond 255, and up to 65535 is more than enough)

- Angles (using 65536 increments in a circle instead of 360 degrees)

The most interesting one is angles. Most programming languages use angles in radians, which have a range of 2π (about 6.28). Using a single-precision float (float32) to store that still takes 4 bytes, and wastes a lot of the range and precision. Using a 16-bit integer means essentially using 65536 increments in a circle instead of 360. That means each increment is equivalent to about 0.0055 degrees. Travelling a distance of 10,000 pixels at 0.0055 degrees will travel 9999.99995 pixels horizontally, and about 1 pixel vertically. So this seems like pretty good precision actually - even over very large distances, the amount of error from rounding the angle to a 16-bit value should only be in the region of a pixel or two.
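
The angle encoding and the precision estimate can be sketched as follows. The function names are hypothetical, and rounding to the nearest step is an assumption; the game's own conversion may differ.

```javascript
const TWO_PI = 2 * Math.PI;

// Map an angle in radians (any value) to one of 65536 steps around the circle.
function angleToUint16(radians) {
  const wrapped = ((radians % TWO_PI) + TWO_PI) % TWO_PI; // normalise to [0, 2π)
  return Math.round(wrapped / TWO_PI * 65536) % 65536;    // a result of 65536 wraps to 0
}

// Convert a 16-bit step count back to radians.
function uint16ToAngle(value) {
  return value / 65536 * TWO_PI;
}

// Sideways drift after travelling `distance` with the angle off by one full step.
function driftForOneStep(distance) {
  return distance * Math.sin(TWO_PI / 65536);
}
```

`driftForOneStep(10000)` comes out a little under 1 pixel, matching the estimate above. With rounding to the nearest step, the angle is never off by more than half a step, so the typical drift is smaller still.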

The code to send a full update for a unit is in the unit WriteFullUpdate() method on the server, and it's read in GameClientMessageHandler's #ReadFullUnitUpdates() method on the client.
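
For readers who haven't used DataView before, the general shape of this kind of code is sketched below. The field list, field order and 12-byte layout are assumptions invented for illustration (the real layout is whatever WriteFullUpdate() does in the repository), and the angles are assumed to be already converted to 16-bit values.

```javascript
const BYTES_PER_UNIT = 12; // six uint16 fields in this made-up layout

// Write one unit at `offset` and return the offset of the next unit.
function writeFullUpdate(dataView, offset, unit) {
  dataView.setUint16(offset, unit.id);
  dataView.setUint16(offset + 2, Math.round(unit.x));
  dataView.setUint16(offset + 4, Math.round(unit.y));
  dataView.setUint16(offset + 6, Math.round(unit.speed));
  dataView.setUint16(offset + 8, unit.angle16);
  dataView.setUint16(offset + 10, unit.turretOffset16);
  return offset + BYTES_PER_UNIT;
}

// Read one unit back in the same field order.
function readFullUpdate(dataView, offset) {
  return {
    id: dataView.getUint16(offset),
    x: dataView.getUint16(offset + 2),
    y: dataView.getUint16(offset + 4),
    speed: dataView.getUint16(offset + 6),
    angle16: dataView.getUint16(offset + 8),
    turretOffset16: dataView.getUint16(offset + 10)
  };
}
```

Both sides rely on DataView's default byte order (big-endian), so the reader and writer agree as long as neither passes the optional `littleEndian` argument.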

Full data streaming bandwidth

So once I had a relatively compact data representation, sending full data for all 1000 units every server tick (30 times a second) amounted to around 350KiB/s bandwidth. Not good enough! We'll need to be smarter.

Compression

My first thought was: just compress it! The data can be run through a compression algorithm like deflate, transmitted over the network, and then decompressed on the other end. If that brings the bandwidth down enough, why try harder? There's also the Compression Streams API that (some) browsers provide, so there's a convenient built-in API to do the compression and decompression.
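
As a rough idea of what using the Compression Streams API looks like, here is a minimal sketch that round-trips a byte array through deflate. The helper names are hypothetical, and this is not how Construct's Multiplayer object implements it; it only shows the standard `CompressionStream` and `DecompressionStream` classes.

```javascript
// Compress a Uint8Array with deflate using the Compression Streams API.
async function deflateBytes(bytes) {
  const stream = new Blob([bytes]).stream().pipeThrough(new CompressionStream("deflate"));
  return new Uint8Array(await new Response(stream).arrayBuffer());
}

// Decompress deflate data back to the original bytes.
async function inflateBytes(bytes) {
  const stream = new Blob([bytes]).stream().pipeThrough(new DecompressionStream("deflate"));
  return new Uint8Array(await new Response(stream).arrayBuffer());
}
```

Both helpers are asynchronous, so a caller would do something like `const packed = await deflateBytes(message);` before sending.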

I was thinking about implementing this for the game, and then I realised: why not add the compression in Construct's Multiplayer object instead? Then all Construct projects get the benefit of bandwidth compression. So I did! It was quite complicated, but that's now supported in r319+. It's a nice feature as you don't need to do anything, it just automatically saves you bandwidth by compressing every message. This project benefits from that but so does everyone else using Construct, and these kinds of Construct improvements along the way are part of what this project is about!

After implementing compression, it turns out sometimes it can save loads of data (up to 75%!), but usually it's around 20-30% saving. The bandwidth for 1000 units was now running around 280KiB/s. Better, but still not under the target. We still need to be smarter.

Full vs. delta updates

So I decided to go with the following strategy:

- Rather than sending full data for all 1000 units every tick, spread them out over a time period, say 2 seconds. So in this case it'll send data for about 17 units every server tick, constantly rotating through the full unit list.

- In between, send updates for only the values that are changing, for example the turret angle. These are referred to as delta updates.

Sending 17 units per tick instead of the full 1000 uses a tiny amount of bandwidth - just 6KiB/s in this case. So that's a great baseline, well under the target. This approach basically means all units get a full update every 2 seconds, and clients only need to predict up to 2 seconds of motion using delta updates. Even if something goes wrong, it will only be a second or two before it gets corrected.
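
The rotation described above could look something like the sketch below. The class and function names are made up for illustration, and rounding up the per-tick count is an assumption.

```javascript
// How many units to include per tick so that every unit gets a
// full update within periodSeconds.
function unitsPerTick(unitCount, tickRate, periodSeconds) {
  return Math.ceil(unitCount / (tickRate * periodSeconds));
}

class FullUpdateRotation {
  constructor(tickRate, periodSeconds) {
    this.tickRate = tickRate;
    this.periodSeconds = periodSeconds;
    this.nextIndex = 0; // where the next tick's slice starts
  }

  // Pick the next slice of units for this tick, wrapping around the list.
  next(units) {
    if (units.length === 0) return [];
    const count = Math.min(units.length,
      unitsPerTick(units.length, this.tickRate, this.periodSeconds));
    const picked = [];
    for (let i = 0; i < count; i++) {
      picked.push(units[(this.nextIndex + i) % units.length]);
    }
    this.nextIndex = (this.nextIndex + count) % units.length;
    return picked;
  }
}
```

With 1000 units, 30 ticks per second and a 2 second period, `unitsPerTick(1000, 30, 2)` gives 17, so every unit has been sent at least once after 60 ticks.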

Importantly, delta updates do not send positions. These are constantly changing for a unit moving in a straight line. However the client can keep units moving at the same speed without needing position updates. This means both units that are stopped, or are moving in a straight line, don't need any data to be sent for them at all beyond the occasional full updates. This alone can save a great deal of bandwidth.
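
On the client side, keeping a unit moving without position updates amounts to something like this sketch. The field names and the use of radians with the usual cos/sin convention are assumptions for illustration.

```javascript
// Advance a unit along its current heading.
// speed is in pixels per second, angle in radians, dt in seconds.
function extrapolate(unit, dt) {
  unit.x += Math.cos(unit.angle) * unit.speed * dt;
  unit.y += Math.sin(unit.angle) * unit.speed * dt;
}
```

Calling this every client frame with the frame's delta-time keeps the unit moving at the last known speed and angle until the next full or delta update corrects it.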

It's kind of funny to play the game with only full data updates rotating around every 2 seconds. It's super laggy, but uses a tiny amount of bandwidth! You can see units move smoothly as the client receives full updates indicating the speed and angle, but then they overshoot their target position and get moved back.

All the projectiles and impacts use a different approach of sending one-off "network events" - a list of events such as a projectile being fired, or hitting something, sent at the end of the tick they happened in. So those parts of the game still work smoothly even with these irregular unit updates. These network events use a surprisingly small amount of bandwidth - also around 6KiB/s even in intense combat - so this does not seem to warrant further optimisation, at least for the time being.

Delta updates

In between full updates, units track if anything about them is changed. At the moment this is just three values: the platform speed, the platform angle, and the turret offset angle (which is relative to the platform angle). At the moment the speed rarely changes, as there is no acceleration: it's set once when it starts moving and once when it stops.

The other two values needed a bit more optimisation. Firstly the platform angle does continuously change while units move, but only because of floating point precision errors. Every tick it recalculates its angle to the target, and each time the calculation produces an ever-so-slightly different result. These tiny fractional changes at the limits of double precision floats shouldn't cause the platform angle to be re-sent. So instead it tracks the last angle it sent in uint16 format, and only re-sends the angle if the value as a uint16 has changed. That solved the problem and ensured only meaningful platform angle changes are transmitted.
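
The platform angle check can be sketched like this. The class name and the rounding in the conversion are assumptions, not the game's actual code.

```javascript
const TWO_PI = 2 * Math.PI;

// Convert radians to one of 65536 steps around the circle.
function toUint16Angle(radians) {
  const wrapped = ((radians % TWO_PI) + TWO_PI) % TWO_PI;
  return Math.round(wrapped / TWO_PI * 65536) % 65536;
}

class PlatformAngleTracker {
  constructor() {
    this.lastSent = -1; // -1 means nothing has been sent yet
  }

  // Returns the uint16 value to send, or null if the 16-bit value is unchanged.
  check(radians) {
    const value = toUint16Angle(radians);
    if (value === this.lastSent) return null;
    this.lastSent = value;
    return value;
  }
}
```

Tiny floating point wobble maps to the same 16-bit value, so `check()` returns null and nothing is queued for that tick.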

Turret offset angles suffered a similar problem in combat: there was so much turret rotation going on that thousands of delta updates would be sent per second. The solution was slightly different. Turret angles are actually purely cosmetic - when a projectile is fired, the network event includes the angle of the projectile. So turret angles can be a bit off, and it won't really matter. So turrets only send delta updates for their angle once they have rotated more than a threshold - I went with 0.5 degrees - away from the last angle that was sent. In short this means as they rotate they only send updates every 0.5 degrees of change, which makes them a tiny bit choppy. However it's hard to notice, and it saves loads of bandwidth. When clients do network smoothing, this will probably get smoothed over as well, so it'll matter even less.
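
A threshold check along these lines would do the job. The names are hypothetical, and working in radians with a wrap-around difference is an assumption about the details.

```javascript
const TURRET_THRESHOLD = 0.5 * Math.PI / 180; // 0.5 degrees in radians

// Smallest absolute difference between two angles, allowing for wrap-around.
function angleDifference(a, b) {
  const d = Math.abs(a - b) % (2 * Math.PI);
  return d > Math.PI ? 2 * Math.PI - d : d;
}

// True once the turret has rotated past the threshold since the last sent angle.
function shouldSendTurretAngle(lastSentAngle, currentAngle) {
  return angleDifference(lastSentAngle, currentAngle) > TURRET_THRESHOLD;
}
```

When this returns true, the server would send the new angle and remember it as the last sent angle, so the next update only goes out after another 0.5 degrees of rotation.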

Delta updates are sent by the unit WriteDeltaUpdate() method on the server, and read with GameClientMessageHandler's #ReadDeltaUnitUpdates() method on the client.

End result

So to conclude, the server limits bandwidth with:

- Spreading full updates for all units over a rotating period of 2 seconds, rather than sending them all every tick

- Sending delta updates for values that change in between, and only when meaningful changes happen

- Sending one-off network events for things that have happened, like a projectile being fired

- Compressing all the transmitted data

Now even in intense combat, everything updates in real-time and the bandwidth peaks at around 50 KiB/s - which is how much is used in the first video in this post, with intense combat involving 1000 units.

Intense combat with 1000 units at just 50 KiB/s bandwidth

The rest of the time the bandwidth used is much lower. Target achieved! This might need more updates as the game develops further, but it looks like this approach can work well. If this scales, it seems likely a truly ridiculous scale of game with 10,000 units all in combat at the same time could be done with 500 KiB/s bandwidth - still achievable on many connections. That would be crazy.

That's all for this time. I haven't updated the game on CommandAndConstruct.com yet as the CPU usage is still a bit high and might cause unnecessary battery drain or heating up devices until I get round to optimising it... which will be my next task! Meanwhile as ever, the code is all on GitHub.